Clinically Focused Projects

Dr. Erickson's Radiology Informatics Lab at Mayo Clinic develops CT, MRI and ultrasound tools to identify and classify imaging biomarkers. Investigators hope these tools can help fine-tune treatment options and improve patient outcomes.

Abdominal organs

Adrenal masses

This project seeks to identify imaging-based biomarkers that predict whether an adrenal mass could be malignant and require surgery.

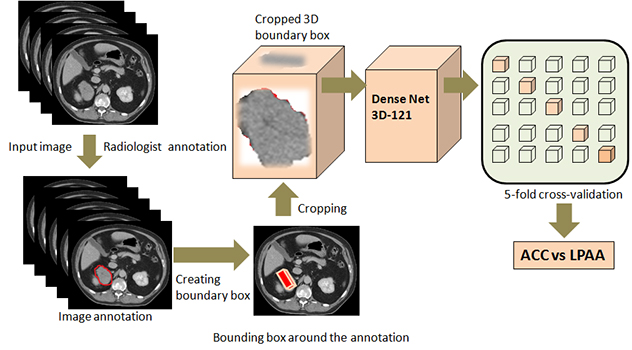

Visual overview of adrenal masses

This schema illustrates the lab's pipeline for distinguishing stage 1 and 2 adrenocortical carcinoma from large, lipid-poor adrenal adenomas.

Artificial intelligence (AI)-enhanced granular renal contrast phase detection

This study combines deep learning and regression to analyze renal contrast phases in CT scans in finer detail. Identifying the phase correctly is crucial for evaluating renal function. By pairing deep learning's contrast detection with the precision of regression, the approach distinguishes noncontrast scans and eight CT phases, spanning the early to late stages of the corticomedullary, nephrographic and pyelographic phases. Features derived from deep learning feed a random forest model, which outputs numerical values that determine the phase more precisely.
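
The source does not describe how the regression output becomes a phase label. A minimal sketch, assuming the random forest regressor returns a continuous score on a hypothetical 0-to-8 scale (0 = noncontrast, 1 to 8 = the eight contrast phases in temporal order), is to round to the nearest phase and clamp to the valid range. `NUM_CONTRAST_PHASES` and `score_to_phase` are illustrative names, not the lab's code.

```python
# Hypothetical scale: 0 = noncontrast, 1-8 = the eight contrast phases,
# ordered from early corticomedullary to late pyelographic.
NUM_CONTRAST_PHASES = 8


def score_to_phase(score: float) -> int:
    """Map a continuous regression score onto the nearest discrete phase index."""
    index = round(score)  # nearest integer; Python rounds exact .5 ties to even
    return max(0, min(NUM_CONTRAST_PHASES, index))  # clamp to the range 0-8


print(score_to_phase(3.4))
```

Keeping the raw score alongside the label (for example, 3.4 rather than just 3) preserves where within a phase a scan falls, which is the kind of granularity a regression output can add over a plain classifier.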

Body composition analysis

Most CT and MRI exams of the abdomen are done to assess the condition of abdominal organs. But body composition can also help predict overall health and the ability to withstand major stresses such as surgery. In this project, the lab uses abdominal images to compute specific markers of body composition, including fat inside and outside the abdominal cavity and the thickness of the abdominal musculature. The lab then assesses whether these markers predict health and survival. The lab is also examining related biomarkers to see whether they can predict health.
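
Once a segmentation model has labeled each pixel of an axial slice, fat-area markers reduce to pixel counts scaled by the pixel spacing (for DICOM images, the Pixel Spacing attribute, in millimeters). The sketch below assumes a hypothetical label convention (1 = subcutaneous fat, 2 = visceral fat); the lab's actual labels, slice selection and muscle-thickness measurement are not described in the source.

```python
import numpy as np

# Hypothetical label values in a single-slice segmentation mask.
SUBCUTANEOUS_FAT = 1
VISCERAL_FAT = 2


def fat_areas_cm2(mask: np.ndarray, pixel_spacing_mm: tuple) -> dict:
    """Subcutaneous and visceral fat areas (cm^2) and their ratio for one axial slice."""
    pixel_area_cm2 = pixel_spacing_mm[0] * pixel_spacing_mm[1] / 100.0  # mm^2 -> cm^2
    sat = np.count_nonzero(mask == SUBCUTANEOUS_FAT) * pixel_area_cm2
    vat = np.count_nonzero(mask == VISCERAL_FAT) * pixel_area_cm2
    ratio = vat / sat if sat > 0 else float("nan")  # undefined without subcutaneous fat
    return {"sat_cm2": sat, "vat_cm2": vat, "vat_sat_ratio": ratio}
```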

Cholangiocarcinoma

This project combines topological data analysis and deep learning to predict cholangiocarcinoma. It uses MRI to make diagnoses more accurate.
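
The source does not say which topological features the lab extracts. As an illustration of the basic idea behind topological data analysis, the sketch below computes 0-dimensional persistent homology of a point cloud under the Vietoris-Rips filtration: every point is born as its own component at scale 0, and a component dies at the distance where it first joins another. These death times are exactly the edge lengths of a minimum spanning tree, so a union-find pass over sorted edges (Kruskal's algorithm) suffices. Some libraries use half the distance as the scale; `h0_persistence` is a hypothetical name, and the input points (for example, coordinates sampled from a segmented structure on MRI) are assumed.

```python
import math
from itertools import combinations


def h0_persistence(points):
    """0-dimensional Vietoris-Rips persistence pairs (birth, death) of a point cloud."""
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # All pairwise edges, shortest first (O(n^2), fine for small clouds).
    edges = sorted(
        (math.dist(points[i], points[j]), i, j) for i, j in combinations(range(n), 2)
    )
    deaths = []
    for length, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:  # two components merge: one of them dies here
            parent[root_i] = root_j
            deaths.append(length)
    # Every component is born at 0; the last surviving one never dies.
    return [(0.0, d) for d in deaths] + [(0.0, math.inf)]
```

For three collinear points at x = 0, 1 and 10, the two clusters merge at distances 1 and 9, and one component persists forever.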

Deep-learning-based decision support tool for distinguishing uterine leiomyosarcomas

Despite recent advances in imaging, it is still challenging to distinguish uterine leiomyosarcoma, a rare but aggressive cancer, from noncancerous leiomyomas. In recent years, healthcare professionals have increased their use of AI and deep-learning techniques, including in diagnostic imaging. The lab team hypothesizes that cutting-edge texture analysis, machine learning and AI-based approaches can revolutionize the role that radiologic imaging plays in diagnosing uterine tumors.

Deep-learning analysis of biopsy images for prognostic markers of chronic kidney disease

This study investigates whether deep learning can be an efficient alternative to traditional manual methods of assessing chronic kidney disease risk. The Radiology Informatics Lab applied a deep-learning model to kidney biopsy images to automatically quantify kidney microstructures and identify prognostic markers for chronic kidney disease. The research team is comparing the deep-learning metrics with manual evaluations and looking for correlations with clinical characteristics.

Kidney cyst segmentations

These example segmentations of kidney cysts on MRIs of patients with autosomal dominant polycystic kidney disease were obtained manually and with the lab's automatic deep-learning model.

Deep learning for automated segmentation of polycystic kidneys

While MRI is commonly used for people with polycystic kidney disease, ultrasound is portable and cost-effective. The lab believes deep learning can efficiently automate segmentation of kidney images in people with polycystic kidney disease. The lab is exploring how effective ultrasound is at classifying polycystic kidney disease to see whether it can serve as an alternative or supplement to MRI.
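
Comparing automatic segmentations with manual ones needs an agreement metric, which the source does not name. The Dice similarity coefficient is a common choice; the sketch below assumes two binary masks of the same shape (for example, a manual and a model-generated kidney mask) and treats two empty masks as perfect agreement, which is a convention rather than a rule.

```python
import numpy as np


def dice_coefficient(mask_a: np.ndarray, mask_b: np.ndarray) -> float:
    """Dice = 2 |A intersect B| / (|A| + |B|) for two binary masks of the same shape."""
    a = mask_a.astype(bool)
    b = mask_b.astype(bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return float(2.0 * np.logical_and(a, b).sum() / total)
```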

Detection of multiple myeloma lesions using whole-body CT scans

Dr. Erickson's lab is developing a fast and accurate computer-assisted tool to detect multiple myeloma lesions on whole-body, low-dose CT scans.

Improvement of renal vessel segmentation using tubular filters

The lab is working to improve renal vessel segmentation using tubular filters. The team designed this method to refine and delineate the intricate structures in medical-imaging data. Tubular filters isolate and highlight the tubular shape of renal vessels, which makes the segmentation results more specific and accurate.
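
The source does not specify which tubular filter the lab uses. One widely used family is Hessian-based vesselness in the style of Frangi et al.: across a bright vessel the intensity curves sharply downward (a large negative Hessian eigenvalue), while along the vessel it barely changes (an eigenvalue near zero). The sketch below is a simplified single-scale 2-D version written with NumPy only; `sigma`, `beta` and the choice of `c` as half the maximum structure value are assumptions, and a multiscale, maintained implementation such as scikit-image's `skimage.filters.frangi` would normally be preferred.

```python
import numpy as np


def gaussian_smooth(image: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur (zero padding at the borders)."""
    radius = int(3 * sigma)
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-(offsets**2) / (2 * sigma**2))
    kernel /= kernel.sum()

    def blur_1d(values: np.ndarray) -> np.ndarray:
        return np.convolve(values, kernel, mode="same")

    blurred_rows = np.apply_along_axis(blur_1d, 1, image)
    return np.apply_along_axis(blur_1d, 0, blurred_rows)


def vesselness_2d(image: np.ndarray, sigma: float = 1.5, beta: float = 0.5) -> np.ndarray:
    """Single-scale, Frangi-style vesselness for bright tubes on a dark background."""
    smoothed = gaussian_smooth(image.astype(float), sigma)
    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (columns, x).
    gy, gx = np.gradient(smoothed)
    hyy, _ = np.gradient(gy)
    hxy, hxx = np.gradient(gx)
    # Scale-normalize the Hessian so responses are comparable across sigma.
    hxx, hyy, hxy = sigma**2 * hxx, sigma**2 * hyy, sigma**2 * hxy
    # Closed-form eigenvalues of the symmetric 2x2 matrix [[hxx, hxy], [hxy, hyy]].
    root = np.sqrt((hxx - hyy) ** 2 + 4 * hxy**2)
    ev_a = 0.5 * (hxx + hyy + root)
    ev_b = 0.5 * (hxx + hyy - root)
    # Order the eigenvalues so that |l1| <= |l2|.
    swap = np.abs(ev_a) > np.abs(ev_b)
    l1 = np.where(swap, ev_b, ev_a)
    l2 = np.where(swap, ev_a, ev_b)
    blobness = np.abs(l1) / np.maximum(np.abs(l2), 1e-12)  # ~0 on a line, ~1 on a blob
    structure = np.sqrt(l1**2 + l2**2)  # ~0 in flat background
    c = 0.5 * structure.max() if structure.max() > 0 else 1.0
    v = np.exp(-(blobness**2) / (2 * beta**2)) * (1 - np.exp(-(structure**2) / (2 * c**2)))
    v[l2 > 0] = 0.0  # a bright tube needs strong negative curvature across it
    return v
```

On a synthetic image containing one bright horizontal line, the response is strong on the line and zero both in the flat background and just beside the line, where the cross-sectional curvature is positive.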

Pancreatic fat estimation for risk factor assessment

Excess fat in the pancreas can affect pancreatic health. Standard methods for measuring and reporting pancreatic fat fraction have not yet been established. The lab is studying whether AI models could one day estimate pancreatic fat automatically for radiologists.
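
The source does not describe how pancreatic fat is measured. On MRI, one common definition is the signal fat fraction, F / (F + W), computed from chemical-shift-encoded (Dixon) fat-only and water-only images; the sketch below averages it inside a pancreas region of interest, which an AI segmentation model could supply. The inputs and the function name are assumptions, and this simple ratio ignores confounders such as T1 bias and T2* decay that proton density fat fraction methods correct for.

```python
import numpy as np


def mean_fat_fraction(fat: np.ndarray, water: np.ndarray, roi: np.ndarray) -> float:
    """Mean signal fat fraction (%) inside an ROI from fat-only and water-only images."""
    inside = roi.astype(bool)
    f = fat[inside].astype(float)
    w = water[inside].astype(float)
    total = f + w
    valid = total > 0  # skip voxels with no signal to avoid dividing by zero
    return float(100.0 * np.mean(f[valid] / total[valid]))
```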

Polycystic kidney disease biomarkers

This project develops tools to help healthcare teams predict how polycystic kidney disease will progress and plan treatment for patients. By characterizing the disease efficiently, the lab can better assess the disease state and measure the benefits of potential therapies.
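
The source does not list the specific biomarkers. Total kidney volume (TKV), often divided by patient height, is a widely used imaging biomarker of polycystic kidney disease progression; assuming the project works with kidney segmentation masks, TKV reduces to a voxel count times the voxel volume. The function name and inputs are hypothetical.

```python
import numpy as np


def height_adjusted_tkv(kidney_mask: np.ndarray, voxel_spacing_mm: tuple, height_m: float):
    """Total kidney volume (mL) and height-adjusted TKV (mL/m) from a 3-D binary mask."""
    voxel_ml = float(np.prod(voxel_spacing_mm)) / 1000.0  # mm^3 -> mL
    tkv_ml = np.count_nonzero(kidney_mask) * voxel_ml
    return tkv_ml, tkv_ml / height_m
```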

Predictive analysis of primary sclerosing cholangitis

This project uses deep learning and topological data analysis to examine MRI scans. The project aims to predict outcomes in people with primary sclerosing cholangitis. Using advanced analytical techniques, the research team seeks to make diagnoses more accurate and provide insights into disease progression and outcomes. These techniques offer a new approach to understanding and managing primary sclerosing cholangitis.

Imaging analysis for primary sclerosing cholangitis

This in-depth workflow diagram shows the use of algebraic topology-based machine learning to analyze imaging signals for diagnosing primary sclerosing cholangitis.
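
The source does not detail how imaging signals are turned into topological features. A standard construction for a 1-D signal, such as a hypothetical intensity profile sampled along a duct, is 0-dimensional sublevel-set persistence: sweep a threshold upward, each local minimum starts a component, and when two components merge, the younger one (with the higher minimum) dies at the current value (the elder rule). `sublevel_persistence_1d` is an illustrative name, not the lab's pipeline.

```python
import math


def sublevel_persistence_1d(signal):
    """0-dimensional persistence pairs of the sublevel-set filtration of a 1-D signal."""
    n = len(signal)
    order = sorted(range(n), key=lambda i: signal[i])  # sweep from low to high values
    parent = [None] * n  # None = sample not yet below the threshold
    birth = {}  # root index -> value of that component's minimum

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    pairs = []
    for i in order:
        parent[i] = i
        birth[i] = signal[i]
        for j in (i - 1, i + 1):
            if 0 <= j < n and parent[j] is not None:
                root_i, root_j = find(i), find(j)
                if root_i == root_j:
                    continue
                # Elder rule: the component with the higher minimum dies here.
                if birth[root_i] > birth[root_j]:
                    young, old = root_i, root_j
                else:
                    young, old = root_j, root_i
                if birth[young] < signal[i]:  # drop zero-persistence pairs
                    pairs.append((birth[young], signal[i]))
                parent[young] = old
    # The component holding the global minimum never dies.
    pairs.append((birth[find(order[0])], math.inf))
    return pairs
```

For the signal `[3, 1, 4, 0, 5, 2]`, the minima at 1 and 2 die when the sweep reaches the peaks at 4 and 5, and the global minimum at 0 never dies, giving `[(1, 4), (2, 5), (0, inf)]`.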

Prostate cancer

This project aims to develop a process to detect clinically significant prostate cancer, predict outcomes using MRI and evaluate clinical outcomes.

Head, neck and spine

3D classification of pseudoprogression and true progression in glioblastoma

For people with glioblastoma who are undergoing chemotherapy and radiation therapy, a critical and unresolved challenge is distinguishing pseudoprogression from true progression. A misdiagnosis can lead to incorrect treatment, misinterpreted trial results and increased anxiety in patients. This project aims to develop an MRI-based model that distinguishes true progression from pseudoprogression in glioblastoma.

Classification of brain lesions

This project uses deep learning with MRI to group tumefactive brain lesions into these classifications: gliomas, metastases, lymphomas and tumefactive multiple sclerosis lesions.

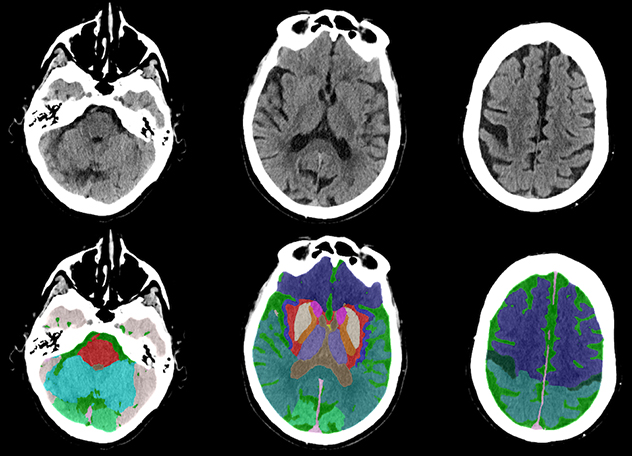

Head CT scans

This fully automated anatomical segmentation of a head CT scan was obtained with the lab's deep-learning model.

CT brain segmentation and stroke detection

Ischemic stroke is a common cardiovascular disease and one of the leading causes of death. Although CT is essential for evaluating people suspected of having had a stroke, it is challenging for radiologists to identify ischemic regions on noncontrast CT alone. In this project, the lab aims to develop an algorithm that uses segmentation and classification models to automatically identify and locate ischemic stroke regions.

Deep learning to determine gene status in glioblastoma multiforme

Dr. Erickson's team is developing a deep-learning model that uses different brain MRI sequences to predict the methylation status of the MGMT gene promoter in people with glioblastoma multiforme.

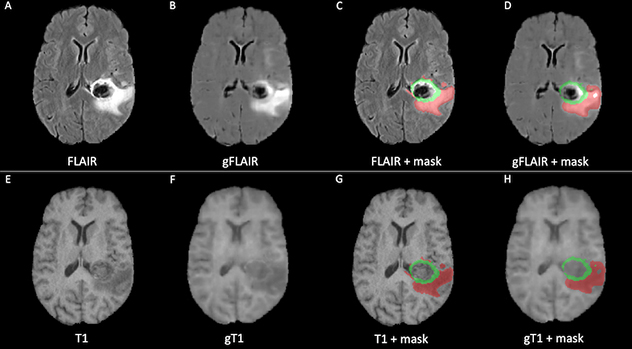

Brain MRI

These example brain MRIs were synthesized using generative adversarial networks (GANs) (B, F) and are shown next to the corresponding original MRIs (A, E). The synthesized MRIs can be used to trace brain lesions (D, H) with accuracy comparable to the original masks (C, G).

Deep-learning tool for the early diagnosis of progressive supranuclear palsy

This project investigates structural and volumetric changes in the brainstem and midbrain in patients with progressive supranuclear palsy compared with people without the disease and patients with Parkinson's disease. The lab is creating two segmentation models and one classifier for this project.

Detection and localization of cervical spine fractures

This project aims to detect and locate cervical spine fractures on spinal CT scans. The project also aims to apply large language models to extract information about cervical spine fractures from patients' radiology reports.

Detection of focal cortical dysplasia and posttreatment outcomes of epilepsy

The goal of this project is to develop a deep-learning tool that combines MRI scans, positron emission tomography (PET) scans, electroencephalograms and pathology data to automatically detect and segment focal cortical dysplasia lesions. The lab's approach involves conducting radiomics and image-based deep-learning analyses of MRI to evaluate the outcomes of drug or surgical treatments in people with epilepsy.

Generative adversarial networks to synthesize brain MRI

Missing brain MRI sequences are a major obstacle to developing new deep-learning models and applying existing ones. For this project, the lab aims to synthesize missing brain MRI sequences using generative adversarial networks as substitutes for real MRI scans. To understand whether these images could one day be a valid alternative to real MRI scans, the lab is assessing the quality of the generated images and using them as inputs to existing deep-learning models. The same approach could be extended to other imaging modalities.
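
The source says the lab assesses the quality of generated images but not which metrics it uses. Peak signal-to-noise ratio (PSNR) is one common full-reference measure when a real acquisition of the missing sequence is available for comparison; the sketch assumes both images share the same intensity scale, given by `data_range`, and the function name is illustrative.

```python
import numpy as np


def psnr(reference: np.ndarray, synthesized: np.ndarray, data_range: float) -> float:
    """Peak signal-to-noise ratio (dB) between a real and a synthesized image."""
    mse = np.mean((reference.astype(float) - synthesized.astype(float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range**2 / mse))
```

PSNR rewards pixel-level fidelity only, which is why a task-based check, such as feeding synthetic images into existing models as described above, is a useful complement.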

Glioblastoma progression and pseudoprogression biomarkers

This project is developing a biomarker that uses early MRI to determine whether early enhancement is due to tumor progression or to treatment effects that mimic tumor progression, known as pseudoprogression. Accurately distinguishing true progression from pseudoprogression is critical: if the enhancement reflects pseudoprogression, effective therapy should continue, but if the tumor is truly progressing, second-line agents can be beneficial.

Multitask brain tumor inpainting with diffusion models

This project presents a tool that applies denoising diffusion probabilistic models to medical imaging, with a particular focus on brain MRI. The tool can inpaint synthetic yet highly realistic tumoral lesions into brain MRI slices. It can also remove brain tumors to produce healthy-looking MRI slices.

In image processing and computer graphics, "inpainting" refers to reconstructing lost or deteriorated parts of images and videos. The goal is to fill in the missing or damaged regions in a visually plausible way so that the repaired area blends seamlessly with the surrounding image. Dr. Erickson's lab masked the regions of brain images that contained tumoral lesions and tasked the model with reconstructing them. As a result, the research team can later mask part of a healthy brain image and ask the model to generate a tumor within that region, as if the image came from a patient with a real tumor.
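
The source describes masking regions and reconstructing them but not the exact sampling procedure. One common way to inpaint with a denoising diffusion probabilistic model (the RePaint-style approach, used here as an assumption) is to merge, at every reverse step t, the model's sample inside the masked region with a forward-noised copy of the known image outside it. The sketch shows only the noise schedule and that merge: the trained denoising network is omitted, `x_t_model` stands in for its output, and the linear schedule values are the common DDPM defaults rather than the lab's settings.

```python
import numpy as np


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> np.ndarray:
    """Cumulative products abar_t = prod_{s<=t} (1 - beta_s) for a linear noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def q_sample(x0: np.ndarray, t: int, alpha_bars: np.ndarray, noise: np.ndarray) -> np.ndarray:
    """Forward diffusion: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise


def merge_known_region(x_t_model, known_image, keep_mask, t, alpha_bars, rng):
    """Keep noised known pixels where keep_mask == 1 and the model's sample elsewhere."""
    x_t_known = q_sample(known_image, t, alpha_bars, rng.standard_normal(known_image.shape))
    return keep_mask * x_t_known + (1.0 - keep_mask) * x_t_model
```

Generating a tumor in a healthy image would then mean setting `keep_mask` to 0 inside the chosen region and 1 everywhere else, so only that region is filled in by the model.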

This capability helps address dataset scarcity and imbalance, improving how well deep-learning models can evaluate and analyze medical images.

Thyroid ultrasound evaluation of nodules

Thyroid nodules are common, but it can be hard to distinguish noncancerous nodules from cancerous ones. And not all cancerous nodules need aggressive treatment. This project uses ultrasound imaging to try to identify whether nodules are cancerous and aggressive.

Heart and cardiovascular system

Classification of cardiac diseases

This project applies deep-learning methods to cardiac ultrasound imaging, also known as echocardiography, to classify diseases of the heart and cardiovascular system.

Coronary artery disease

This project uses algebraic topology to identify patterns and extract important information from imaging data related to coronary artery disease.
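
To feed topological information into a model, persistence diagrams are usually summarized as fixed-length features. The sketch below computes three simple statistics from a list of (birth, death) pairs, ignoring infinite pairs and pairs shorter than a noise threshold; the function name, the threshold and the choice of statistics are illustrative assumptions, and richer vectorizations such as persistence images or landscapes exist.

```python
import math


def persistence_features(pairs, min_persistence=0.0):
    """Summary statistics of finite (birth, death) pairs longer than min_persistence."""
    lifetimes = [
        death - birth
        for birth, death in pairs
        if math.isfinite(death) and death - birth > min_persistence
    ]
    return {
        "count": len(lifetimes),
        "total_persistence": sum(lifetimes),
        "max_persistence": max(lifetimes, default=0.0),
    }
```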

Esophagus

Detection of Barrett esophagus dysplasia

The Radiology Informatics Lab is developing a computer-assisted tool to detect grades of Barrett esophagus dysplasia using whole-slide imaging of pathology slides.

Musculoskeletal

Angle calculator for femoroacetabular impingement syndrome

The Radiology Informatics Lab is developing an automated angle calculator that will measure the angles important for diagnosing femoroacetabular impingement syndrome (FAIS) on hip CT scans.

Deep learning to create a total hip arthroplasty radiography registry

This project used deep-learning algorithms to build an automated registry of hip and pelvic radiographs from people who had undergone total hip replacement. The algorithms curated and annotated 846,988 Digital Imaging and Communications in Medicine (DICOM) files with 99.9% accuracy by identifying radiographs, ensuring proper annotation and automatically measuring acetabular angles. The automated system supports patient care, longitudinal surveillance and large-scale research, and it offers a model that can be adapted to other anatomical areas and institutions.

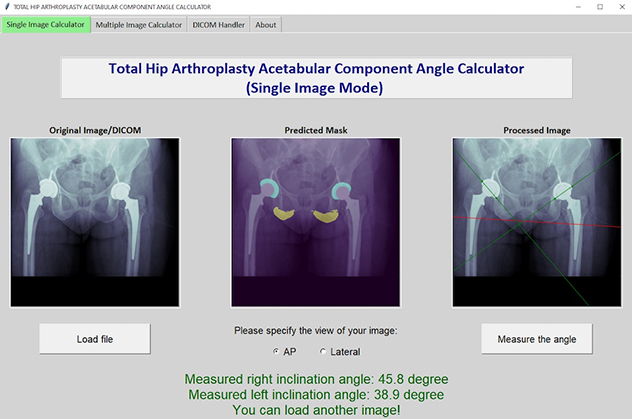

X-ray model tool

The lab's deep-learning model segments hip landmarks and automatically measures acetabular angles on X-rays of patients receiving total hip replacements.

Deep learning to measure acetabular component angles after total hip replacement

Correct placement of acetabular components is important for the success and longevity of total hip replacements. This project focused on developing a deep-learning tool to automatically measure acetabular component angles on postoperative radiographs after total hip replacement. Manual measurements are time-consuming and can vary between readers; this tool addresses both problems.

The tool uses two U-Net convolutional neural network models. It achieves high accuracy, with a small mean difference and minimal discrepancies between human and machine measurements. This development advances the precise and efficient evaluation of acetabular component position in clinical and research settings for total hip replacement.
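
Once the segmentation models have located the relevant landmarks, the angles themselves are plane geometry. The sketch assumes hypothetical inputs in pixel coordinates: the endpoints of the cup opening's long axis and a pelvic reference line (the inter-teardrop line is a common convention) for inclination, and the short and long axes of the cup opening's projected ellipse for anteversion, using the ellipse-projection relation arcsin(short / long) found in methods such as Lewinnek's. The lab's exact definitions are not given in the source.

```python
import math


def acute_line_angle_deg(p1, p2, q1, q2):
    """Acute angle (degrees) between line p1-p2 and line q1-q2, points given as (x, y)."""
    a = math.atan2(p2[1] - p1[1], p2[0] - p1[0])
    b = math.atan2(q2[1] - q1[1], q2[0] - q1[0])
    diff = abs(math.degrees(a - b)) % 180.0
    return min(diff, 180.0 - diff)  # lines have no direction, so fold into 0-90


def radiographic_anteversion_deg(short_axis, long_axis):
    """Anteversion from the projected ellipse of the cup opening: arcsin(short / long)."""
    return math.degrees(math.asin(short_axis / long_axis))
```

For example, a cup axis running from (0, 0) to (10, 10) against a horizontal reference line gives an inclination of 45 degrees regardless of which endpoint is listed first, and an ellipse whose short axis is half its long axis gives 30 degrees of anteversion.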

Deep learning to measure femoral component subsidence after total hip replacement

Femoral component subsidence can indicate complications after total hip replacement. The Radiology Informatics Lab has developed and evaluated a deep-learning tool to automatically measure femoral component subsidence using two serial anteroposterior hip radiographs.

The tool is based on a dynamic U-Net model. The research team trained it on 500 anteroposterior hip radiographs. The tool was highly accurate and correlated strongly with manual measurements made by orthopedic surgeons, with no large differences or systematic discrepancies. Unlike manual methods, it requires no user input, showing its potential to assess femoral component subsidence efficiently and accurately after total hip replacement.
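
Subsidence is a change in position between two radiographs, so measurements must be in millimeters and corrected for each image's magnification. The sketch below assumes hypothetical landmarks (a point on the stem and a fixed bony landmark, such as the tip of the greater trochanter, located by the segmentation model) and a calibration object of known size, such as the femoral head implant; none of these choices come from the source. Whether a positive result means distal migration depends on where the landmarks sit.

```python
import math


def mm_per_pixel(known_size_mm: float, measured_size_px: float) -> float:
    """Magnification calibration from an object of known physical size."""
    return known_size_mm / measured_size_px


def subsidence_mm(baseline, follow_up):
    """Change in stem-to-bone landmark distance between two AP radiographs.

    Each argument is (stem_point, bone_point, mm_per_px) with (x, y) pixel points.
    A positive value means the distance grew between the two radiographs.
    """
    def distance_mm(stem_point, bone_point, scale):
        return math.dist(stem_point, bone_point) * scale

    return distance_mm(*follow_up) - distance_mm(*baseline)
```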

Deep-learning AI model to assess hip dislocation risk after total hip replacement

This project developed a convolutional neural network model to assess the risk of hip dislocation after total hip replacement. The model was developed through a retrospective evaluation of radiographs from a large cohort.

The model achieved high sensitivity and negative predictive value. It focuses on crucial radiographic regions, such as the femoral head and acetabular component, to assess risk precisely. This advancement illustrates the transformative potential of automated imaging AI models in orthopedics. It also helps healthcare professionals identify people at high risk of dislocation after total hip replacement more quickly and accurately.
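
Sensitivity and negative predictive value answer the two questions that matter most for a screening tool like this one: what share of high-risk patients it catches, and how far a "low risk" result can be trusted. The sketch computes both from confusion-matrix counts; the function name and any example counts are hypothetical, and the source does not report the underlying numbers.

```python
def sensitivity_and_npv(tp: int, fn: int, tn: int):
    """Sensitivity = TP / (TP + FN); negative predictive value = TN / (TN + FN)."""
    sensitivity = tp / (tp + fn)  # share of true high-risk cases that are flagged
    npv = tn / (tn + fn)  # share of "low risk" calls that really are low risk
    return sensitivity, npv
```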

THA-AID: Deep learning for automatic implant detection in total hip replacement

THA-AID is a deep-learning tool to automatically identify in situ implants in revision total hip replacement.

The lab trained THA-AID on 241,419 radiographs. It can accurately identify 20 femoral and eight acetabular components from various radiographic views, and it incorporates uncertainty quantification and outlier detection. The tool outperforms existing approaches by offering full classification capabilities and robustness across various test sets and radiographic views.
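
The source says THA-AID incorporates uncertainty quantification and outlier detection without saying how. One simple, common approach, used here as an assumption, is to compute the entropy of the softmax output, normalize it by its maximum (the log of the number of classes) and route predictions above a threshold to human review. Monte Carlo dropout and model ensembles are common alternatives; the threshold and function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)  # subtract the max to avoid overflow in exp
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predictive_entropy(probs):
    """Shannon entropy (nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def flag_uncertain(logits, max_entropy_fraction=0.5):
    """True when normalized entropy exceeds the threshold (needs at least two classes)."""
    probs = softmax(logits)
    normalized = predictive_entropy(probs) / math.log(len(probs))
    return normalized > max_entropy_fraction
```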

THA-Net: Deep learning for templating and patient-specific surgical execution in total hip replacement

THA-Net is a deep-learning algorithm that can create synthetic postoperative total hip replacement radiographs.

The lab trained THA-Net on 356,305 paired radiographs. The algorithm replaces the target hip joint in preoperative images with user-specified total hip replacement implants. Its synthetic images achieve higher surgical validity scores than actual postoperative radiographs. Because THA-Net's synthetic images are highly realistic, they advance patient-specific surgical planning and the integration of technologies such as robotics and augmented reality into total hip replacement procedures.

Ultrasound imaging for carpal tunnel syndrome

Carpal tunnel syndrome involves numbness caused by compression of the median nerve. Accurate in situ measurements of nerve morphology are essential for diagnosing carpal tunnel syndrome. This project uses ultrasound imaging to automatically segment images of the median nerve in people with carpal tunnel syndrome. The lab hypothesizes that changes in nerve shape and volume reflect changes in nerve function and predict treatment results. Because ultrasound is noninvasive and cost-effective, it is well suited to this task. AI-enhanced ultrasound can provide insights into carpal tunnel syndrome that help radiologists.